Multi-Dimensional Spectral Process for Cepstral Feature Engineering & Formant Coding

Ahmad Z. Hasanain, Muntaser M. Syed, Veton Z. Kepuska, Marius C. Silaghi

Abstract

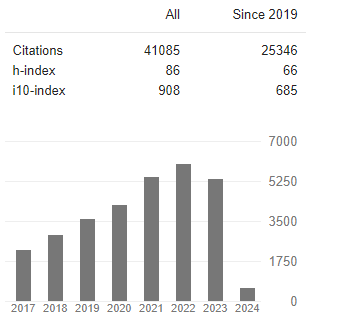

The fundamental frequency feature is essential for Automatic Speech Recognition because its patterns convey paralinguistic information and its tuning normalizes other speech features. Human speech is multidimensional in that it is minimally represented by three variables: the intonation (or pitch), the formants (or timbre), and the speech resolution (or depth). These variables represent the hidden states of the local glottal variation, the vocal tract response, and the frequency scale, respectively. Computing them one by one is less efficient than computing them together, so this article introduces a new speech feature extraction approach. The article is introductory; it focuses on the basic concepts of the new approach and does not elaborate on all applications. It demonstrates that a cepstral value, being a spectral value of spectra, carries a unit of acceleration, since its discrete variable, the quefrency, can be expressed in hertz per microsecond. The article shows how to produce refined voice analysis from robust estimates and how to reconstruct speech signals from feature spaces. It concludes that the pitch track of the new approach is as good as those of two open-source pitch extractors. We introduce the Speech Quefrency Transform (SQT) approach, along with multiple quefrency scales; the approach combines multiple processes, attenuates background noise, and enables distant-speech recognition. SQT is a set of frequency transforms whose spectral leakages are controlled per a frequency-modulation model. SQT projects the stationarity of a time series onto a hyperspace that, when reduced for pitch-track extraction, resembles the cepstrogram.
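As a point of reference for the cepstrogram mentioned above, the following is a minimal sketch of conventional cepstral pitch estimation on a single frame. It is not the SQT approach; it only illustrates the baseline idea of taking a spectrum of a log spectrum and reading the pitch period off the peak quefrency. The function name `cepstral_pitch`, the search range of 60 to 400 Hz, and the synthetic test signal are all assumptions made for illustration.

```python
import numpy as np

def cepstral_pitch(frame, sample_rate, fmin=60.0, fmax=400.0):
    """Estimate f0 (Hz) of one frame from the peak of its real cepstrum.

    Hypothetical helper: a textbook cepstral method, not the SQT approach.
    """
    windowed = frame * np.hanning(len(frame))
    # Log-magnitude spectrum; the small constant avoids log(0).
    log_magnitude = np.log(np.abs(np.fft.rfft(windowed)) + 1e-12)
    # Real cepstrum: inverse transform of the log-magnitude spectrum.
    cepstrum = np.fft.irfft(log_magnitude)
    # Restrict the search to quefrencies (in samples) of plausible pitch periods.
    q_lo = int(sample_rate / fmax)
    q_hi = int(sample_rate / fmin)
    peak = q_lo + int(np.argmax(cepstrum[q_lo:q_hi + 1]))
    return sample_rate / peak

# Synthetic voiced-like frame (assumption): harmonics of 200 Hz with a 1/k tilt.
sample_rate = 16000
true_f0 = 200.0
t = np.arange(1024) / sample_rate
harmonics = [np.sin(2 * np.pi * k * true_f0 * t) / k for k in range(1, 40)]
frame = np.sum(harmonics, axis=0)

estimate = cepstral_pitch(frame, sample_rate)
print(f"estimated f0: {estimate:.1f} Hz")
```

Repeating this per frame and stacking the cepstra over time would give a conventional cepstrogram. The resolution of the estimate is limited to whole-sample quefrencies, which is one reason finer quefrency scales are of interest.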