Review Article - (2024) Volume 5, Issue 4

Pulse Modulated Neural Nets with Adaptive Temporal Windows for Enhanced Video Perception

Received Date: Oct 24, 2024 / Accepted Date: Nov 04, 2024 / Published Date: Dec 10, 2024

Copyright: ©2024 Greg Passmore. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Citation: Passmore, G. (2024). Pulse Modulated Neural Nets with Adaptive Temporal Windows for Enhanced Video Perception. Adv Mach Lear Art Inte, 5(4), 01-26.

Abstract

A key challenge in modern artificial intelligence is the limited awareness of temporal dynamics. This is especially evident in AI's struggles with video animation and interpreting sequential frames or time-dependent data. This paper presents a new method for modulating neuron spikes to synchronize with incoming frames, addressing the temporal limitations of voltage AI models.

This approach involves a specialized class of neuron, similar to Wolfgang Maass's liquid state machine concept. However, our model differs significantly in its synchronization strategy. Neurons are synchronized with frame input, incorporating a flexible time window that enables spike modulation ranging from 1 to 1024 spikes per frame. This adaptability is crucial for accurately processing and interpreting time-based data.
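The abstract does not say how an input value is turned into a spike count, or how spikes are placed inside a frame's time window. The sketch below is one hypothetical reading and should not be taken as the paper's method. It assumes a linear map from a normalized input in [0, 1] onto the stated 1 to 1024 range, and it spaces spikes evenly within a window that opens when each frame arrives. The names `spikes_for_intensity` and `frame_spike_times` are illustrative only.

```python
# Hypothetical sketch: the count mapping and even spacing are assumptions,
# not the encoding rule used in the paper.
MIN_SPIKES = 1     # lower bound quoted in the abstract
MAX_SPIKES = 1024  # upper bound quoted in the abstract


def spikes_for_intensity(intensity: float) -> int:
    """Map a normalized input in [0, 1] linearly onto 1..1024 spikes."""
    clamped = min(max(intensity, 0.0), 1.0)
    return MIN_SPIKES + round(clamped * (MAX_SPIKES - MIN_SPIKES))


def frame_spike_times(frame_index: int, frame_period: float, n_spikes: int) -> list[float]:
    """Spread n_spikes evenly over the window [start, start + frame_period)."""
    if not MIN_SPIKES <= n_spikes <= MAX_SPIKES:
        raise ValueError("spike count must lie between 1 and 1024")
    start = frame_index * frame_period  # window opens when the frame arrives
    step = frame_period / n_spikes
    return [start + k * step for k in range(n_spikes)]


# Example: the third frame (index 2) of a 25 fps stream (40 ms per frame).
count = spikes_for_intensity(0.003)   # a dim input maps to a few spikes
times = frame_spike_times(2, 0.040, count)
```

Tying the window to frame arrival keeps every spike attributable to exactly one frame. A real implementation might instead jitter or burst the spikes inside the window.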

The main focus of this paper is to explore the operational principles of these specialized neurons. While the wiring and architectural design of these neurons will be discussed in a future article, this paper aims to establish the foundational understanding of how these neurons function individually and in response to temporal data inputs.

By synchronizing neuron spikes with frame inputs and allowing for variable spike modulation, this method aims to enhance AI's ability to handle time-based data. The proposed neuron model represents a step towards more advanced and temporally aware AI systems, potentially unlocking new capabilities in video animation and other time-sensitive applications.

Biomimicry

In this study, we highlight the neuron as the fundamental unit of granularity in artificial neural networks, aiming to create an advanced neuron model that excels in time-based processing. The rationale behind this approach is rooted in biomimicry, the practice of emulating biological systems and processes in engineering and technology. By closely studying human neuron types and behaviors, we aim to extract valuable insights that can inform the design of artificial neurons with enhanced capabilities for handling temporal information.

The value of biomimicry in this context lies in its potential to bridge the gap between the complex, dynamic functionality of biological neurons and the comparatively static nature of traditional artificial neurons. While classic artificial neuron models are effective in various applications, they often struggle in tasks requiring sophisticated time-based analysis. These conventional models lack the inherent mechanisms for temporal integration and dynamic adaptation found in biological neurons, making them less suitable for processing sequential or time-dependent data, such as video streams.

Biological neurons possess remarkable abilities for encoding, transmitting, and processing temporal information. This includes generating action potentials, synaptic plasticity, and intricate network interactions, all contributing to their ability to adapt to changing stimuli over time. In contrast, classic artificial neurons typically employ static activation functions and do not inherently consider the temporal aspects of input data. As a result, they struggle to effectively capture the nuances and dynamics inherent in time-based processes like video analysis.

Our objective is to leverage the principles of biomimicry to design a novel neuron model that overcomes these limitations. By incorporating features inspired by the adaptive and temporal processing capabilities of biological neurons, we aim to create artificial neurons that are more proficient in handling time-dependent data. This entails integrating mechanisms for temporal sequencing, dynamic threshold adjustment, and synaptic plasticity, which are vital for accurately interpreting and responding to video content.

The development of such a neuron model has the potential to significantly enhance the performance of artificial neural networks in video processing and other time-critical applications. By closely resembling the functionality of biological neurons, these improved artificial neurons could offer greater accuracy, efficiency, and adaptability in tasks involving temporal data analysis.

Neuronal Diversity

The human brain, a marvel of complexity and functionality, comprises by some estimates approximately 10,000 distinct types of neurons, each with its unique role and characteristics. Broadly speaking, these diverse neuron types can be classified into three main categories: motor neurons, sensory neurons, and interneurons. This categorization is based on the primary functions these neurons serve within the neural network of the brain and body.

Motor neurons play a vital role in transmitting motor information from the brain and spinal cord to the muscles and glands. These neurons serve as the executors of the nervous system, translating neural signals into physical actions and responses. Whether it involves the precise movement of fingers or the regulation of glandular secretions, motor neurons are essential in transforming neural instructions into tangible outputs.

In contrast, sensory neurons are responsible for relaying sensory information from various parts of the body to the brain and spinal cord. These neurons act as the sensory input channels of the nervous system, converting external stimuli such as light, sound, touch, and temperature into electrical signals that can be processed by the brain. Sensory neurons enable the perception of the environment and the body's interaction with it, forming the foundation of our senses.

Interneurons act as intermediaries within the nervous system, transmitting information between different types of neurons. Predominantly found in the brain and spinal cord, interneurons are vital for the integration and processing of information. They form intricate networks that facilitate communication between sensory and motor neurons, as well as between neurons within the brain. Interneurons play a crucial role in reflex actions, higher cognitive functions, and the modulation of sensory and motor pathways.

The diversity of neurons extends beyond their functional classification; neurons also exhibit various shapes and sizes, each tailored to their specific roles. This morphological diversity is evident in the distinct structures of neurons, ranging from the elongated axons of motor neurons that can extend to distant muscles to the intricate dendritic trees of interneurons that enable extensive synaptic connections.

Neuronal Firing

Depolarization in neurons refers to a change in the membrane potential that makes the inside of the cell less negative relative to the outside. In other words, the membrane potential shifts toward a less negative value. This process plays a pivotal role in the generation and propagation of electrical signals, known as action potentials, within neurons.

To comprehend depolarization, it is crucial to first understand the resting membrane potential of neurons. Neurons at rest maintain a negative membrane potential, typically around -70 millivolts (mV), due to differences in ion concentrations inside and outside the cell and the selective permeability of the neuron's membrane to these ions. The sodium-potassium pump maintains these concentration gradients by actively transporting sodium ions (Na⁺) out of the neuron and potassium ions (K⁺) into the neuron. The resting potential itself arises mainly because the resting membrane is far more permeable to K⁺ than to Na⁺.

During depolarization, there is a temporary change in the neuron's membrane permeability, enabling Na⁺ ions to enter the cell. This influx of positively charged sodium ions reduces the charge difference across the membrane, causing the membrane potential to become more positive (less negative). If this depolarization reaches a certain threshold, usually around -55 mV, it triggers an action potential.

An action potential is a rapid, transient change in membrane potential that travels along the neuron's axon and is used for transmitting signals over long distances within the nervous system. After depolarization, the neuron undergoes repolarization, where the membrane potential returns to its resting negative value, and hyperpolarization, a state where the membrane potential becomes even more negative than the resting potential. These processes involve the outflow of K⁺ ions and the closure of Na⁺ ion channels.

In summary, depolarization in neurons is an important step in the process of neural communication, enabling the generation of action potentials that facilitate the transmission of information throughout the nervous system.

Hyperpolarization in neurons refers to a change in the neuron's membrane potential that renders it more negative than the resting membrane potential. This process occurs after an action potential and is an essential aspect of the neuron's return to its resting state.

To delve deeper into this phenomenon, let's consider the sequence of events in an action potential. After a neuron fires an action potential, it undergoes a phase known as repolarization, where the membrane potential returns toward the resting level. This repolarization is primarily due to the closure of sodium ion (Na⁺) channels and the opening of potassium ion (K⁺) channels. As a result, K⁺ ions flow out of the neuron, driven by both their concentration gradient and the electrical gradient.

Hyperpolarization follows repolarization and is characterized by the membrane potential becoming even more negative than the resting potential. This is mainly because the K⁺ channels remain open slightly longer than necessary to reach the resting potential. The continued efflux of K⁺ ions out of the neuron increases the negative charge inside the cell, thus causing hyperpolarization.

During hyperpolarization, the neuron is less responsive to stimuli than it is at rest. This interval falls within the refractory period, during which it is more difficult or impossible for the neuron to fire another action potential. The refractory period ensures that action potentials travel in one direction along an axon and that they are discrete events rather than continuous signals.

Once the refractory period is over, the neuron's membrane potential returns to its resting state as the remaining voltage-gated K⁺ channels close. Over longer timescales, the sodium-potassium pump (Na⁺/K⁺-ATPase) actively transports Na⁺ ions out of the neuron and K⁺ ions into the neuron, maintaining the ion concentration gradients on which excitability depends. The neuron is then ready to respond to new stimuli and potentially fire another action potential.

In summary, hyperpolarization is a phase following an action potential in which the neuron's membrane potential becomes more negative than the resting potential. This phase contributes to setting the refractory period, thereby regulating the timing and directionality of action potentials in neuronal signaling.

The duration of the various states associated with an action potential in neurons — depolarization, repolarization, and hyperpolarization — varies depending on the type of neuron and the conditions under which it is operating. However, to provide a general idea, we can discuss typical timeframes for these phases in mammalian neurons under normal physiological conditions.

Depolarization: The depolarization phase is rapid, typically lasting about 1 millisecond (ms). During this phase, voltage-gated sodium channels open, allowing an influx of sodium ions into the neuron, which causes the membrane potential to become more positive.

Repolarization: Following depolarization, repolarization also occurs quickly, usually within about 1 ms. In this phase, the sodium channels start to inactivate, and voltage-gated potassium channels open, allowing potassium ions to flow out of the neuron. This outflow of positively charged ions restores the membrane potential toward its resting negative value.

Hyperpolarization: The hyperpolarization phase is slightly longer, typically lasting a few milliseconds. During this phase, the potassium channels remain open longer than needed just to reach the resting potential, resulting in the membrane potential becoming more negative than the resting level. This phase corresponds to the relative refractory period.

Refractory Periods: The refractory period consists of two parts:

• Absolute Refractory Period: This period lasts approximately 1-2 ms, spanning the depolarization phase and most of the repolarization phase. During this time, the neuron cannot fire another action potential regardless of the strength of the stimulus.

• Relative Refractory Period: This period occurs during the hyperpolarization phase and may last several milliseconds. During this time, it is possible to trigger another action potential, but only with a stronger-than-normal stimulus.

It's important to note that these durations are approximate and can vary. Factors such as neuron type, temperature, and the specific physiological conditions of the neuron can affect the exact timing of these phases. For instance, in some types of neurons or under different physiological conditions, the duration of these phases can be longer or shorter.
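The threshold, reset, and refractory behavior described above can be sketched with a leaky integrate-and-fire (LIF) neuron. This is a standard simplification and is swapped in here for illustration. It does not model the individual Na⁺ and K⁺ channels. The rest (-70 mV) and threshold (-55 mV) values come from the text. The 2 ms absolute refractory period falls in the stated 1-2 ms range. The -75 mV reset, standing in for hyperpolarization, and the 10 ms membrane time constant are assumptions.

```python
def simulate_lif(drive_mv: float, duration_ms: float = 100.0, dt_ms: float = 0.1,
                 v_rest: float = -70.0, v_threshold: float = -55.0,
                 v_reset: float = -75.0, tau_ms: float = 10.0,
                 refractory_ms: float = 2.0) -> list[float]:
    """Return spike times (ms) of a leaky integrate-and-fire neuron.

    drive_mv is the steady depolarization the input would produce if the
    neuron never fired (input current times membrane resistance, in mV).
    """
    v = v_rest
    refractory_steps = int(round(refractory_ms / dt_ms))
    blocked = 0  # remaining steps of the absolute refractory period
    spikes = []
    for step in range(int(round(duration_ms / dt_ms))):
        if blocked > 0:
            # Absolute refractory period: V is held at the reset value.
            blocked -= 1
            continue
        # Forward-Euler step of tau * dV/dt = -(V - V_rest) + drive.
        v += (dt_ms / tau_ms) * (-(v - v_rest) + drive_mv)
        if v >= v_threshold:
            spikes.append(step * dt_ms)
            # Reset below rest to mimic hyperpolarization; climbing back from
            # -75 mV makes the next spike harder (relative refractoriness).
            v = v_reset
            blocked = refractory_steps
    return spikes


subthreshold = simulate_lif(10.0)  # settles near -60 mV, never fires
weak = simulate_lif(30.0)          # fires regularly
strong = simulate_lif(60.0)        # fires more often
```

A drive of 10 mV only lifts the membrane toward -60 mV, so the neuron never reaches threshold. Stronger drives shorten the climb back from the hyperpolarized reset, so the firing rate rises. The refractory period still enforces a minimum gap between spikes.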

Visual Processing Pipeline

Visual processing is a complex process that involves multiple steps and various neural cell types at each stage. The process begins with the capture of light by the eyes and ends with the perception of images in the brain. Here's an overview of the steps in visual processing and the neural cells involved:

Light Reception in the Retina: Photoreceptors (Rods and Cones): Light first enters the eye and is captured by photoreceptor cells in the retina. Rods are responsible for low-light (scotopic) vision and do not discern color, while cones are responsible for color (photopic) vision and function best in well-lit conditions.

Photoreceptors, comprising rods and cones, are specialized neurons located in the retina, responsible for the initial transduction of light into neural signals. These cells are the primary interface between the visual world and the nervous system, playing a pivotal role in the process of vision. The unique characteristics, functional properties, and types of photoreceptors are essential to understanding the complexity of visual processing.

Photoreceptors are uniquely adapted to capture light and convert it into electrochemical signals. This process begins when light photons strike photopigments within the photoreceptors, triggering a cascade of biochemical reactions that ultimately lead to changes in membrane potential. These changes are then transmitted to downstream neurons in the retina, initiating the complex process of visual perception.

Rods

• Function and Specialization: Rods are highly sensitive to light and are responsible for vision under low-light conditions, a function known as scotopic vision. They are more numerous than cones and are predominantly located in the peripheral regions of the retina.

• Photopigment: The photopigment in rods is rhodopsin, which is particularly sensitive to dim light. Rhodopsin's high sensitivity enables rods to detect low levels of illumination, making them essential for night vision.

• Signal Transduction: Upon absorption of light, rhodopsin undergoes a conformational change, activating a G-protein-coupled receptor pathway that leads to the closure of sodium channels, hyperpolarizing the rod cell.

• Spatial Summation: Many rods converge onto a single bipolar cell, a feature that allows for spatial summation. This convergence enhances light sensitivity but reduces spatial resolution, making rod-mediated vision less detailed but more sensitive to low light levels.
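The trade-off in spatial summation can be shown with a toy model. Rod signals are summed in non-overlapping groups, one group per bipolar cell, and a unit counts as detecting light when its signal reaches a threshold. The threshold value, the signal levels, and the group size of four are arbitrary assumptions chosen only for illustration.

```python
THRESHOLD = 1.0  # hypothetical detection threshold, arbitrary units


def pool(signals: list[float], group_size: int) -> list[float]:
    """Sum non-overlapping groups of rod signals onto one bipolar cell each."""
    return [sum(signals[i:i + group_size])
            for i in range(0, len(signals), group_size)]


def detected(signals: list[float], threshold: float = THRESHOLD) -> list[bool]:
    """Mark which units reach the detection threshold."""
    return [s >= threshold for s in signals]


dim_light = [0.3] * 8                    # every rod is individually sub-threshold
alone = detected(dim_light)              # no rod reaches threshold on its own
converged = detected(pool(dim_light, 4)) # 4 rods per bipolar cell: both detect

# Resolution cost: two different fine patterns become indistinguishable.
pattern_a = [0.3, 0.0, 0.3, 0.0]
pattern_b = [0.0, 0.3, 0.0, 0.3]
same = pool(pattern_a, 4) == pool(pattern_b, 4)
```

Pooling lets dim light that no single rod could register cross threshold. The cost is that the pooled cell can no longer tell which of its rods were lit.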

Cones

• Function and Specialization: Cones are responsible for high-acuity, color vision under well-lit conditions, known as photopic vision. They are less sensitive to light than rods but provide the ability to discern fine details and color.

• Photopigments: Cones contain one of three different photopigments, each sensitive to different wavelengths of light (short, medium, or long wavelengths), corresponding to blue, green, and red light, respectively. This trichromatic system forms the basis of color vision in humans.

• Signal Transduction: Similar to rods, cones transduce light into neural signals through a G-protein-coupled receptor pathway. However, the photopigments in cones have lower sensitivity to light, requiring brighter illumination for activation.

• Distribution and Acuity: Cones are densely packed in the fovea, the central region of the retina, providing high spatial resolution and visual acuity. This concentration of cones enables detailed and color vision, essential for tasks like reading and recognizing faces.

The distinct characteristics of rods and cones, including their specific photopigments and light sensitivity, as well as their distribution and connectivity patterns in the retina, demonstrate the intricate design of the visual system. The specialized functions of these photoreceptors enable humans to perceive a wide range of lighting conditions and colors, forming the foundation of our rich and dynamic visual experience. The study of rods and cones continues to be a crucial area in vision research, providing insights into the fundamental mechanisms of light perception and potential therapies for retinal diseases.

Preliminary Processing in the Retina

Horizontal Cells: These cells integrate signals from multiple photoreceptors and modulate the input to bipolar cells, contributing to contrast enhancement and spatial processing. Horizontal cells are a distinct type of neuron found in the retina, playing a critical role in the processing of visual information. These cells are interneurons that integrate and modulate signals between photoreceptors (rods and cones) and bipolar cells. Horizontal cells are known for their distinctive features and contribution to several key aspects of visual processing, including contrast enhancement and color perception.

Morphological Characteristics:

• Horizontal cells are characterized by their wide and laterally extended dendritic and axonal arbors, which allow them to connect with numerous photoreceptors and bipolar cells across a broad area of the retina.

• There are typically two main types of horizontal cells based on their morphology and connectivity: Type I (or H1), which connects primarily with cones, and Type II (or H2), which connects with both rods and cones. Some species also have a Type III horizontal cell.

Function in Lateral Inhibition

• One of the primary functions of horizontal cells is to mediate lateral inhibition, a process that sharpens and enhances visual information. By inhibiting the activity of neighboring photoreceptors and bipolar cells, horizontal cells increase the contrast and definition of the visual image.

• Lateral inhibition is crucial for edge detection and the perception of spatial detail. It allows the visual system to detect borders and transitions between light and dark areas more effectively.
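Lateral inhibition can be sketched in one dimension. Here each unit's output is its own input minus a fraction of its two neighbors' inputs, applied to a step from a dark region to a bright one. The subtractive form, the weight of 0.2, and the border handling are simplifying assumptions, not a model of actual horizontal-cell synapses.

```python
def lateral_inhibition(inputs: list[float], weight: float = 0.2) -> list[float]:
    """Subtract a fraction of the two nearest neighbours from each unit.

    Border units reuse their own value for the missing neighbour (assumption).
    """
    n = len(inputs)
    out = []
    for i in range(n):
        left = inputs[max(i - 1, 0)]
        right = inputs[min(i + 1, n - 1)]
        out.append(inputs[i] - weight * (left + right))
    return out


edge = [1.0] * 4 + [5.0] * 4   # dark region, then a bright region
response = lateral_inhibition(edge)
# Interior of each region is flat, but the unit just before the edge dips
# below the dark plateau and the unit just after overshoots the bright one.
```

The dip and overshoot on either side of the border exaggerate the contrast there. This is the edge-sharpening effect described above.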

Role in Color Perception

• Horizontal cells are also involved in color processing, particularly in the antagonistic center-surround organization of photoreceptor receptive fields. This organization is important for color contrast and the ability to distinguish colors in complex visual scenes.

• By integrating signals from different types of cones, horizontal cells contribute to the processing of color information and help in minimizing chromatic aberration.

Signal Integration and Feedback

• Horizontal cells receive input from photoreceptors and provide feedback to both photoreceptors and bipolar cells. This feedback mechanism modulates the photoreceptor response to light and influences the bipolar cell output, impacting the overall visual processing in the retina.

• The extensive network of horizontal cell connections enables them to integrate visual information over a large area, contributing to the uniformity and consistency of the visual field.

Unusual Features and Adaptation

• Horizontal cells exhibit unique electrophysiological properties, including graded potentials rather than action potentials, allowing them to modulate signal strength continuously.

• They play a role in the adaptation of the retina to different lighting conditions. By modulating the sensitivity of photoreceptors and bipolar cells, horizontal cells help the retina adjust to varying levels of illumination.

Bipolar Cells

Bipolar cells receive input from photoreceptors and horizontal cells, and they relay signals to retinal ganglion cells. They are crucial in processing visual information before it is sent to the brain.

Bipolar cells are integral components of the retinal circuitry, playing a crucial role in the processing of visual information within the eye. Situated between the photoreceptors (rods and cones) and the retinal ganglion cells (RGCs), bipolar cells act as intermediaries, conveying and modulating signals from the photoreceptors to the RGCs. The unique characteristics and diverse subtypes of bipolar cells contribute significantly to the initial stages of visual processing.

Bipolar cells are named for their structure, which features two distinct processes: a dendritic process that receives inputs from photoreceptors and an axonal process that forms synapses with RGCs and, in some cases, amacrine cells. This bipolar structure allows them to effectively transmit and transform visual information from the outer to the inner retina.

One of the key functions of bipolar cells is to process and convey information about light intensity and color from photoreceptors to RGCs. They play a vital role in establishing the basis for contrast sensitivity, color perception, and spatial resolution in vision. Bipolar cells achieve this through various mechanisms:

• Direct and Indirect Pathways: Bipolar cells receive direct synaptic input from photoreceptors, which provides a pathway for rapid and precise transmission of visual information and forms the center of their receptive fields. They also receive indirect input relayed through horizontal cells, which modulate the signal from photoreceptors, contributing to contrast enhancement and center-surround receptive field organization.

• ON and OFF Pathways: Bipolar cells are broadly classified into two types based on their response to light: ON bipolar cells and OFF bipolar cells. ON bipolar cells depolarize (become more excited) in response to an increase in light intensity in their receptive field center, while OFF bipolar cells depolarize in response to a decrease in light intensity. This distinction is crucial for encoding changes in light intensity and contributes to the detection of luminance contrast.

• Subtypes and Specialization: There are multiple subtypes of bipolar cells, each specialized for different aspects of visual processing. Some bipolar cells are connected predominantly to cones and are involved in high-acuity, color vision. Others are connected to rods and are responsible for processing low-light (scotopic) vision. The specific connections and properties of these subtypes enable the retina to process a wide range of visual information under varying lighting conditions.

• Center-Surround Receptive Fields: Similar to RGCs, bipolar cells contribute to the formation of center-surround receptive fields, a fundamental property of retinal processing. This organization enhances the retina's ability to detect edges and contrasts in the visual scene, essential for spatial resolution and pattern recognition.
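The ON/OFF split can be illustrated on a sequence of frames by sending luminance increments to one channel and decrements to another, with each channel rectified at zero. This is a deliberate simplification. Real ON and OFF bipolar cells produce graded responses that depend on light level and on the surround, not only on frame-to-frame change. The function name `on_off_responses` is hypothetical.

```python
def on_off_responses(luminance: list[float]) -> tuple[list[float], list[float]]:
    """Split frame-to-frame luminance changes into rectified ON and OFF channels."""
    on, off = [], []
    for prev, cur in zip(luminance, luminance[1:]):
        delta = cur - prev
        on.append(max(0.0, delta))    # ON-like: signals increments only
        off.append(max(0.0, -delta))  # OFF-like: signals decrements only
    return on, off


on, off = on_off_responses([0.2, 0.8, 0.8, 0.1])
# on  is approximately [0.6, 0.0, 0.0]
# off is approximately [0.0, 0.0, 0.7]
```

Splitting the signal this way lets each channel stay non-negative, much like a firing rate, while the pair together still encodes both brightening and dimming.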

Bipolar cells are not mere passive conduits in the visual pathway; they play an active and dynamic role in processing visual information. Their diverse subtypes and specialized functions are instrumental in encoding various aspects of the visual scene, such as light intensity, color, and contrast. The complexity and adaptability of bipolar cells underscore the sophistication of the retinal circuitry.

Amacrine Cells

These interneurons interact with bipolar cells and retinal ganglion cells, playing a role in complex visual processing such as motion detection and temporal aspects of vision.

Amacrine cells are a diverse and functionally complex group of neurons located in the inner retina, playing a vital role in the processing and modulation of visual information. These cells are situated at the interface between bipolar cells and retinal ganglion cells (RGCs), and they interact extensively with both. Amacrine cells are known for their wide range of functions, diverse morphologies, and the numerous subtypes that contribute to their role in the visual system.

Unlike other retinal neurons, amacrine cells typically lack axons and communicate through their dendrites, which form extensive networks within the inner plexiform layer (IPL) of the retina. This unique structure allows them to integrate and modulate signals across a broad area of the retina, influencing the output of RGCs.

Amacrine cells are primarily involved in complex image processing tasks within the retina, including:

• Temporal and Spatial Integration: Amacrine cells integrate visual information over time and space, contributing to the detection of motion, changes in light intensity, and the overall dynamic aspects of vision. Their extensive dendritic networks enable them to process information across a wide area, enhancing the retina's ability to detect movement and temporal changes.

• Contrast Enhancement and Edge Detection: By modulating the input to RGCs, amacrine cells play a crucial role in enhancing contrast and facilitating edge detection. This is achieved through lateral inhibition, a process where amacrine cells inhibit neighboring bipolar cells and RGCs, sharpening the visual signal.

• Direction Selectivity and Motion Detection: Some subtypes of amacrine cells are specialized for detecting the direction of motion. These direction-selective amacrine cells are crucial for encoding the direction and speed of moving objects in the visual field.

• Light Adaptation and Sensitivity Regulation: Amacrine cells contribute to the retina's ability to adapt to different lighting conditions. They help regulate the sensitivity of bipolar cells and RGCs, ensuring that the retina can function effectively across a range of light intensities.

• Subtypes and Specialization: There are numerous subtypes of amacrine cells, each with unique morphologies and functions. Some subtypes are involved in specific aspects of color processing, while others modulate the activity of RGCs in response to specific patterns or types of movement. The diversity of amacrine cell subtypes allows for a high degree of specialization in retinal processing.
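Direction selectivity can be illustrated with the Hassenstein-Reichardt correlator. This is a classic motion-detection model originally proposed for insect vision. It is swapped in here as a conceptual example and is not the mechanism used by direction-selective amacrine cells. The model multiplies a delayed copy of one receptive field's signal by the current signal of its neighbor, then subtracts the mirror-image product. The one-sample delay and the function name are assumptions.

```python
def reichardt(left: list[float], right: list[float], delay: int = 1) -> float:
    """Opponent Hassenstein-Reichardt correlator, summed over time.

    left and right are the signals of two neighbouring receptive fields.
    Positive output means left-to-right motion; negative means right-to-left.
    """
    total = 0.0
    for t in range(delay, len(left)):
        rightward = left[t - delay] * right[t]  # delayed left arm x current right arm
        leftward = right[t - delay] * left[t]   # delayed right arm x current left arm
        total += rightward - leftward
    return total


# A bright spot crosses the left field at t=1 and the right field at t=2.
left_field = [0.0, 1.0, 0.0, 0.0]
right_field = [0.0, 0.0, 1.0, 0.0]
rightward_motion = reichardt(left_field, right_field)  # 1.0
leftward_motion = reichardt(right_field, left_field)   # -1.0
```

The sign of the output encodes direction. The delay sets which speeds produce the strongest response, which loosely mirrors the speed tuning mentioned above.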

Transmission to the Brain

Retinal Ganglion Cells (RGCs)

The axons of RGCs form the optic nerve, which carries visual information from the eye to the brain. RGCs are responsible for the initial encoding and transmission of visual signals to the brain. Different types of RGCs process different aspects of the visual field, such as color, contrast, and movement.

Retinal Ganglion Cells (RGCs) are critical components in the visual processing pathway, situated at the final stage of the retinal circuitry. These cells play a pivotal role in converting visual information captured by photoreceptors into neural signals that can be interpreted by the brain. RGCs are not only conduits for transmitting visual data but also actively participate in the initial stages of visual processing. Their diversity in types and functions significantly contributes to the complexity and richness of visual perception.

The primary role of RGCs is to receive and integrate synaptic inputs from bipolar cells and amacrine cells within the retina. The integration of these inputs results in the generation of action potentials, or "spikes," which are then transmitted along the optic nerve to the brain. The rate and timing of these action potentials encode various aspects of the visual scene, such as light intensity, contrast, color, and motion.

One of the remarkable features of RGCs is their diversity. There are numerous subtypes of RGCs, each specialized for processing different aspects of the visual input. For example, some RGCs are particularly sensitive to specific wavelengths of light, contributing to color vision, while others are more responsive to changes in light intensity or motion. This specialization is essential for the parallel processing of various visual attributes, allowing the brain to construct a detailed and comprehensive visual representation.

RGCs are often classified based on their morphological characteristics, physiological responses, and the types of visual information they process. Commonly recognized types include:

• M-type (Magnocellular) RGCs: These cells are larger and have faster-conducting axons. They are highly sensitive to motion and temporal changes in the visual scene, making them crucial for detecting movement and providing input to the brain's motion-processing pathways.

• P-type (Parvocellular) RGCs: These cells are smaller and have slower-conducting axons. They are highly sensitive to fine spatial details and color, playing a significant role in high-resolution vision and color discrimination.

• K-type (Koniocellular) RGCs: These cells project to the koniocellular layers of the LGN, which are interspersed between the M and P layers, and are involved in processing specific color contrasts, such as blue-yellow.
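The contrast between motion-sensitive M-type cells and detail-oriented P-type cells is often summarized as transient versus sustained responses. The sketch below models this with a first-order low-pass filter for a sustained, P-like response. For a transient, M-like response, it subtracts that low-pass output from the input, which gives a high-pass signal. This filter pair and the smoothing constant `alpha = 0.2` are illustrative assumptions. They are not a fitted model of either cell type.

```python
def low_pass(signal: list[float], alpha: float) -> list[float]:
    """First-order exponential smoothing: a sustained, P-like response."""
    out, state = [], 0.0
    for x in signal:
        state += alpha * (x - state)
        out.append(state)
    return out


def high_pass(signal: list[float], alpha: float) -> list[float]:
    """Input minus its low-pass version: a transient, M-like response."""
    return [x - y for x, y in zip(signal, low_pass(signal, alpha))]


step = [0.0] * 5 + [1.0] * 45   # light switched on at sample 5 and left on
sustained = low_pass(step, alpha=0.2)
transient = high_pass(step, alpha=0.2)
# sustained rises and stays near 1.0; transient peaks at onset (0.8) then decays toward 0.
```

The transient channel reports only that something changed, which suits motion detection. The sustained channel keeps reporting the steady level, which suits fine detail and color.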

In addition to these broad categories, there are other RGC subtypes specialized for various functions, such as detecting the overall luminance level, edge detection, and orientation. Some RGCs are specifically tuned to detect certain patterns of movement, such as radial or circular motion, contributing to the perception of complex dynamic scenes.

RGCs also exhibit a center-surround receptive field organization, a fundamental property that enhances the ability to detect contrasts and edges in the visual scene. The center-surround organization allows RGCs to respond preferentially to differences in light intensity between the central and peripheral regions of their receptive fields, which is critical for edge detection and spatial resolution.
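A common way to model an ON-center, OFF-surround receptive field is a difference of Gaussians. A narrow excitatory Gaussian is paired with a broader inhibitory one of equal total weight. The one-dimensional version below uses assumed widths (1 and 3 units) and an assumed field radius of 10 units. It shows that uniform illumination nearly cancels, while a spot confined to the center drives a strong response.

```python
import math


def gaussian(x: float, sigma: float) -> float:
    """Normalized 1-D Gaussian."""
    return math.exp(-x * x / (2.0 * sigma * sigma)) / (sigma * math.sqrt(2.0 * math.pi))


def dog_weight(x: float, sigma_center: float = 1.0, sigma_surround: float = 3.0) -> float:
    """ON-center weight: narrow excitatory center minus broad inhibitory surround."""
    return gaussian(x, sigma_center) - gaussian(x, sigma_surround)


def rgc_response(stimulus: list[float], center: int, radius: int = 10) -> float:
    """Weighted sum of the stimulus over the cell's receptive field."""
    total = 0.0
    for offset in range(-radius, radius + 1):
        i = center + offset
        if 0 <= i < len(stimulus):
            total += dog_weight(offset) * stimulus[i]
    return total


uniform = [1.0] * 41
spot = [0.0] * 41
spot[20] = 1.0
flat_response = rgc_response(uniform, 20)  # close to 0: center and surround cancel
spot_response = rgc_response(spot, 20)     # clearly positive: light only in the center
```

Because uniform light is largely cancelled, the cell responds mainly to local differences in intensity. This is why center-surround organization supports edge detection.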

Optic Nerve and Optic Chiasm

The optic nerves from both eyes meet at the optic chiasm, where fibers from the nasal (inner) halves of each retina cross to the opposite side of the brain. This arrangement allows visual information from both eyes to be integrated for binocular vision.

The optic nerve and optic chiasm are key structures in the visual pathway, playing essential roles in the transmission and processing of visual information from the eyes to the brain. While often perceived primarily as conduits for visual signals, these structures have unique features and involve specific neuron types that contribute to the overall process of visual perception.

The optic nerve, formed by the axons of retinal ganglion cells (RGCs), is the primary conduit through which visual information exits the eye and travels towards the brain. Each optic nerve corresponds to one eye, carrying the complete visual field perceived by that eye. The RGCs, which form the optic nerve, are diverse in type and function. They vary in their response to different aspects of the visual field, such as color, contrast, light intensity, and movement. This diversity allows the optic nerve to transmit a rich array of visual information for processing in the brain.

As the optic nerves approach the brain, they converge at a junction known as the optic chiasm. This structure is located at the base of the brain, just above the pituitary gland. The optic chiasm is a crucial point in the visual pathway, as it is where the partial decussation (crossing) of fibers from the two optic nerves occurs. Specifically, the axons from the nasal (inner) halves of each retina cross to the opposite side of the brain, while the axons from the temporal (outer) halves remain on the same side. The nasal retina views the temporal visual field, and the temporal retina views the nasal visual field. As a result, information about each visual hemifield, from both eyes, reaches the opposite hemisphere: the right visual field is processed by the left hemisphere and the left visual field by the right. This crossing is essential for binocular vision and depth perception, as it allows the brain to integrate and compare visual information from both eyes.
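The chiasm's routing rule is that nasal fibers cross and temporal fibers do not. Combined with the optical inversion of the image on the retina, it can be written as a small lookup. The function names are illustrative. The logic checks that both eyes send a given visual hemifield to the opposite hemisphere.

```python
def target_hemisphere(eye: str, retinal_half: str) -> str:
    """Hemisphere ('left' or 'right') that receives fibres from one retinal half."""
    if eye not in ("left", "right") or retinal_half not in ("nasal", "temporal"):
        raise ValueError("eye must be left/right and retinal_half nasal/temporal")
    if retinal_half == "temporal":
        return eye                                # uncrossed: stays on the same side
    return "right" if eye == "left" else "left"   # nasal fibres cross at the chiasm


def hemisphere_for_visual_field(field_side: str, eye: str) -> str:
    """Follow a visual hemifield through the eye's optics to a hemisphere.

    The lens inverts the image, so the right visual field lands on the left
    half of each retina: temporal in the left eye, nasal in the right eye.
    """
    if field_side == "right":
        retinal_half = "nasal" if eye == "right" else "temporal"
    else:
        retinal_half = "nasal" if eye == "left" else "temporal"
    return target_hemisphere(eye, retinal_half)


for eye in ("left", "right"):
    print(eye, "eye: right field ->", hemisphere_for_visual_field("right", eye))
```

Both iterations report the left hemisphere, because partial crossing is what lets each hemisphere see one side of space through both eyes.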

Although the optic nerve and optic chiasm are primarily considered pathways for transmitting visual information, there is evidence suggesting that some level of processing may occur within these structures. For instance, studies have indicated that certain types of RGCs can modulate their signal transmission based on ambient light conditions, which could be considered a form of preprocessing before the signals reach the brain. Additionally, the organization and crossing of fibers in the optic chiasm itself are vital for the correct mapping and integration of visual information in the brain.

Lateral Geniculate Nucleus (LGN) in the Thalamus

Neurons in the LGN receive input from RGCs and perform initial processing before relaying information to the primary visual cortex. The LGN has distinct layers that process different types of visual information, such as color and motion.

The Lateral Geniculate Nucleus (LGN), situated within the thalamus, plays a pivotal role in the visual processing pathway. As the primary relay center for visual information, the LGN serves as a critical intermediary, transferring data from the retinal ganglion cells (RGCs) to the primary visual cortex (V1). The LGN's structure, layered organization, and functional properties underscore its significance in the visual system.

Anatomically, the LGN is a bilaterally symmetrical structure, with each hemisphere of the brain housing an LGN that processes information from the contralateral visual field. This paired structure is distinguished by its six-layer composition in primates, including humans. Each layer receives inputs from either the contralateral or ipsilateral eye, with layers 1, 4, and 6 associated with the former and layers 2, 3, and 5 with the latter.

The layers of the LGN are further categorized based on the type of RGC input they receive. The magnocellular layers (layers 1 and 2) receive input from larger M-type ganglion cells and are integral to processing motion and coarse spatial information. Conversely, the parvocellular layers (layers 3 through 6) receive inputs from smaller P-type ganglion cells and are responsible for processing fine spatial detail and color. In addition, primates have thin koniocellular layers interspersed between the main layers, which receive inputs from K-type ganglion cells. These layers are believed to be involved in processing blue-yellow color contrast and other aspects of visual information.
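The eye-of-origin and cell-class assignments of the six main layers can be summarized as a small table. This Python sketch simply encodes the layer numbers given in the two paragraphs above; the `LGNLayer` type and `layers_for` helper are hypothetical names, and the koniocellular layers are deliberately left out.

```python
from dataclasses import dataclass

# Hypothetical encoding of the primate LGN main layers (1 = most ventral, 6 = most dorsal).
# Koniocellular (intercalated) layers are omitted for simplicity.


@dataclass(frozen=True)
class LGNLayer:
    number: int
    eye: str      # "contralateral" or "ipsilateral"
    pathway: str  # "magnocellular" or "parvocellular"


CONTRALATERAL_LAYERS = {1, 4, 6}  # layers 2, 3 and 5 receive ipsilateral input
MAGNOCELLULAR_LAYERS = {1, 2}     # layers 3 to 6 are parvocellular

LGN_LAYERS = [
    LGNLayer(
        number=n,
        eye="contralateral" if n in CONTRALATERAL_LAYERS else "ipsilateral",
        pathway="magnocellular" if n in MAGNOCELLULAR_LAYERS else "parvocellular",
    )
    for n in range(1, 7)
]


def layers_for(eye: str, pathway: str) -> list[int]:
    """List the layer numbers that receive a given eye's input via a given pathway."""
    return [layer.number for layer in LGN_LAYERS if layer.eye == eye and layer.pathway == pathway]


if __name__ == "__main__":
    for eye in ("contralateral", "ipsilateral"):
        for pathway in ("magnocellular", "parvocellular"):
            print(eye, pathway, layers_for(eye, pathway))
```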

Functionally, the LGN is not merely a passive relay station but is actively involved in several aspects of visual processing. It enhances contrast, integrates spatial and temporal visual signals, and plays a role in visual attention regulation and sensory information filtering. The LGN's ability to modulate the flow of visual data to the cortex based on attentional demands and arousal state is particularly notable. Additionally, it maintains a retinotopic map, preserving the spatial organization of the retina in its projection, which is crucial for spatial coherence in visual perception.

The neural circuitry within the LGN is characterized by receptive fields influenced by the center-surround organization of RGCs. This arrangement allows the LGN to effectively detect luminance contrast and facilitate edge detection in visual scenes. Moreover, the LGN receives not only feedforward input from RGCs but also feedback connections from the visual cortex and other brain regions. This suggests its involvement in higher-level visual processing and the cognitive influences on perception.
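Center-surround receptive fields are commonly modeled as a difference of Gaussians (DoG): a narrow excitatory center minus a broader inhibitory surround. The one-dimensional Python sketch below, with illustrative widths chosen here as assumptions, shows why such a unit is silent on uniform luminance but responds strongly near an edge.

```python
import math

# Minimal 1D difference-of-Gaussians (DoG) model of an ON-center receptive field.
# Kernel widths are illustrative assumptions, not measured values.


def gaussian(x: float, sigma: float) -> float:
    return math.exp(-x * x / (2 * sigma * sigma)) / (sigma * math.sqrt(2 * math.pi))


def dog_kernel(radius: int, sigma_center: float, sigma_surround: float) -> list[float]:
    """Excitatory center minus inhibitory surround, shifted to sum to zero."""
    k = [gaussian(x, sigma_center) - gaussian(x, sigma_surround) for x in range(-radius, radius + 1)]
    mean = sum(k) / len(k)
    return [w - mean for w in k]  # zero sum: uniform light gives no net response


def filter_valid(signal: list[float], kernel: list[float]) -> list[float]:
    """Slide the (symmetric) kernel over the signal without padding."""
    n = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(n)) for i in range(len(signal) - n + 1)]


if __name__ == "__main__":
    step_edge = [0.2] * 30 + [0.8] * 30  # dark region, then bright region
    kernel = dog_kernel(radius=9, sigma_center=1.0, sigma_surround=3.0)
    response = filter_valid(step_edge, kernel)
    # response[i] is centered on input position i + 9; values far from the edge are ~0.
    for i, r in enumerate(response):
        print(i + 9, round(r, 3))
```

The output has a negative lobe just on the dark side of the edge and a positive lobe just on the bright side, which is the luminance-contrast signal the paragraph above attributes to the LGN.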

In its role within the visual pathways, the LGN is instrumental in segregating and processing different aspects of visual information, such as motion, color, and form. After processing in the LGN, visual information is conveyed to the primary visual cortex via the optic radiations, establishing the LGN as a critical gateway for cortical visual processing.

Primary Visual Cortex (V1)

V1

• Neurons in the primary visual cortex, located in the occipital lobe of the brain, are responsible for further processing and interpreting visual information. Cells in V1, such as simple cells, complex cells, and hypercomplex cells, extract features like edges, orientation, and movement from the visual scene.

• V1 is organized retinotopically, meaning there is a mapping of the visual field onto the cortical surface.

The primary visual cortex (V1), also known as the striate cortex or area 17, is a critical region in the brain for the processing of visual information. Located in the occipital lobe, V1 is the first cortical area to receive visual input from the eyes via the lateral geniculate nucleus (LGN) of the thalamus. This area is fundamental in the hierarchical processing of visual stimuli, and it exhibits unique features and specialized neuron types that are essential for the interpretation and perception of visual information.

Structural Organization

• V1 is characterized by its distinct layered structure, typically comprising six layers, each with specific types of neurons and functions. These layers receive and process visual information, with different layers having different connections and roles in visual processing.

• In cross-section, V1 shows a distinctive pale band, the stria (line) of Gennari, formed by myelinated axons in layer 4. This stripe is the distinguishing feature that gives the striate cortex its name.

Functional Specialization

• Neurons in V1 are specialized for processing various aspects of the visual scene, such as orientation, spatial frequency, color, and motion. This specialization is evident in the properties of different types of neurons found in V1.

• V1 maintains a retinotopic map, meaning that there is a systematic spatial correspondence between the retina and the representation of the visual field in the cortex. This mapping is essential for preserving the spatial structure of the visual scene.
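A widely used approximation of the V1 retinotopic map is the complex-logarithm model, w = k·log(z + a), where z is a visual-field location written as a complex number and w is the corresponding cortical location. The Python sketch below uses illustrative values of k and a (assumptions, not fitted data) to show the map's key property: it preserves neighborhood relations while magnifying the fovea.

```python
import cmath

# Sketch of the complex-log retinotopic model w = k * log(z + a).
# k (mm) and a (deg) are illustrative assumptions. Intended for one visual hemifield
# (|angle| <= pi/2), which maps onto the opposite hemisphere's V1.


def cortical_position(ecc_deg: float, angle_rad: float, k: float = 15.0, a: float = 0.7) -> complex:
    """Map a visual-field point (eccentricity, polar angle) to a cortical point in mm."""
    z = cmath.rect(ecc_deg, angle_rad)  # visual-field location as a complex number
    return k * cmath.log(z + a)


def cortical_distance(ecc1: float, ecc2: float, angle_rad: float = 0.0) -> float:
    """Cortical distance (mm) between two points at the same polar angle."""
    return abs(cortical_position(ecc2, angle_rad) - cortical_position(ecc1, angle_rad))


if __name__ == "__main__":
    # One degree near the fovea occupies far more cortex than one degree in the periphery.
    print(f"1-2 deg:   {cortical_distance(1, 2):.2f} mm")
    print(f"20-21 deg: {cortical_distance(20, 21):.2f} mm")
```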

Neuron Types in V1

• Simple Cells: These neurons respond best to bars or edges of specific orientations within their receptive fields. They have distinct excitatory and inhibitory regions and are thought to be critical for edge detection and orientation selectivity.

• Complex Cells: Complex cells also respond to specific orientations but have larger receptive fields and are less sensitive to the exact position of the stimulus within the field. They are important for detecting moving edges and patterns.

• Hypercomplex Cells: Also known as end-stopped cells, hypercomplex cells are sensitive to the length of a stimulus in addition to its orientation. They play a role in perceiving the ends of objects and detecting corners and angles.
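The difference between simple and complex cells is often illustrated with Gabor-filter models: a simple cell is approximated as a rectified Gabor filter, and a complex cell as the "energy" of two Gabor filters 90 degrees out of phase. The Python sketch below is such a textbook-style model, not the mechanism proposed in this paper; the patch size, wavelength, and envelope width are assumptions.

```python
import math

# Gabor / energy-model sketch of V1 simple and complex cells.
# Patch size, wavelength and envelope width are illustrative assumptions.

GRID = range(-12, 13)  # 25 x 25 pixel patch


def gabor(x, y, theta, phase, wavelength=8.0, sigma=4.0):
    """Gabor receptive field whose luminance modulation runs along direction theta."""
    u = x * math.cos(theta) + y * math.sin(theta)
    envelope = math.exp(-(x * x + y * y) / (2 * sigma * sigma))
    return envelope * math.cos(2 * math.pi * u / wavelength + phase)


def grating(x, y, theta, phase, wavelength=8.0):
    """Sinusoidal grating stimulus modulated along direction theta."""
    u = x * math.cos(theta) + y * math.sin(theta)
    return math.cos(2 * math.pi * u / wavelength + phase)


def linear_response(rf_theta, rf_phase, stim_theta, stim_phase):
    """Inner product of receptive field and stimulus over the patch."""
    return sum(gabor(x, y, rf_theta, rf_phase) * grating(x, y, stim_theta, stim_phase)
               for x in GRID for y in GRID)


def simple_cell(stim_theta, stim_phase, pref_theta=0.0):
    """Half-wave rectified linear response: selective for orientation and phase (position)."""
    return max(0.0, linear_response(pref_theta, 0.0, stim_theta, stim_phase))


def complex_cell(stim_theta, stim_phase, pref_theta=0.0):
    """Energy of a quadrature pair: orientation-selective but insensitive to phase."""
    even = linear_response(pref_theta, 0.0, stim_theta, stim_phase)
    odd = linear_response(pref_theta, math.pi / 2, stim_theta, stim_phase)
    return math.hypot(even, odd)


if __name__ == "__main__":
    for deg in (0, 45, 90):
        th = math.radians(deg)
        print(deg, [round(simple_cell(th, p), 2) for p in (0.0, math.pi)],
              [round(complex_cell(th, p), 2) for p in (0.0, math.pi)])
```

Shifting the grating's phase by half a cycle silences the model simple cell but leaves the complex cell unchanged, mirroring the position tolerance described for complex cells.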

Columnar Organization

V1 exhibits a columnar organization, where neurons with similar properties (such as orientation preference) are arranged in vertical columns that extend through the layers of the cortex. This organization facilitates the integration of information about specific visual attributes.

Unusual Features and Processing

• V1 is involved in the initial stages of parallel processing of visual information, where different features of the visual scene are processed simultaneously in separate pathways. For instance, the dorsal stream (processing "where" and "how") and the ventral stream (processing "what") originate from V1.

• The processing in V1 is influenced not only by feedforward input from the LGN but also by feedback from higher-order visual areas and other cortical regions. This feedback can modulate the activity of V1 neurons, influencing perception and attention.

V1 hands off to higher areas: The processed visual information from V1 is sent to secondary and tertiary visual areas for higher-level processing. These areas include V2, V3, V4, and V5/MT (middle temporal), each specialized for processing different aspects of vision, such as color (V4) and motion (V5/MT).

V2

The V2, or Secondary Visual Cortex, is a crucial area in the cerebral cortex for the processing of visual information. Located adjacent to the primary visual cortex (V1) in the occipital lobe, V2 serves as a key relay point in the visual processing pathway, receiving and further refining the visual inputs from V1. This area exhibits unique features and specialized neuron types that enable it to contribute significantly to various aspects of visual perception.

Structural and Functional Organization

• V2 is characterized by its distinct cytoarchitectonic structure and is comparable in size to V1. It maintains a retinotopic organization, similar to V1, ensuring the spatial mapping of the visual field.

• The area is divided into different functional zones, often referred to as stripes: thin stripes, thick stripes, and inter-stripes. Each of these stripes is specialized for processing different types of visual information, contributing to the modular organization of V2.

Processing in V2

• V2 plays a pivotal role in further processing the visual information relayed from V1. This includes the refinement of basic visual attributes such as orientation, spatial frequency, and color, as well as the integration of these attributes to perceive more complex patterns and forms.

• V2 is involved in processing both low-level and higher-order visual features, including the interpretation of illusory contours, figure-ground segregation, and depth perception through binocular disparity.

Neuron Types and Functional Properties

• Neurons in V2 exhibit diverse response properties and are more complex than those in V1. These neurons can be sensitive to specific orientations, spatial frequencies, and colors, and they also respond to more complex stimuli such as angles, corners, and curved lines.

• V2 contains neurons that are specialized in processing binocular disparity, which is crucial for stereoscopic depth perception. These neurons help in constructing a three-dimensional representation of the visual environment.
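Binocular disparity is the horizontal offset between where the same feature falls in the two eyes. A simple computational analogue is to test a range of candidate shifts and keep the one at which the two eyes' signals match best, loosely like a bank of units each tuned to one disparity. The Python sketch below uses one-dimensional block matching, a computer-vision technique chosen here as an illustration; it is not a model of how V2 neurons compute disparity.

```python
import random

# 1D block-matching sketch of disparity estimation (computer-vision analogue, not a V2 model).
# Convention: right[i] corresponds to left[i + d] for the true disparity d.


def estimate_disparity(left: list[float], right: list[float], max_shift: int) -> int:
    """Return the shift d in [-max_shift, max_shift] minimizing mean absolute difference."""
    best_shift, best_cost = 0, float("inf")
    for d in range(-max_shift, max_shift + 1):
        # Only compare samples that exist in both "eyes" for this candidate shift.
        pairs = [(left[i + d], right[i]) for i in range(len(right)) if 0 <= i + d < len(left)]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = d, cost
    return best_shift


if __name__ == "__main__":
    rng = random.Random(0)
    left = [rng.random() for _ in range(64)]  # random texture seen by the left eye
    true_disparity = 3
    right = left[true_disparity:] + [0.0] * true_disparity  # same texture, shifted
    print(estimate_disparity(left, right, max_shift=8))  # prints 3
```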

Role in Visual Pathways

• V2 serves as an important intermediary in both the dorsal and ventral streams of visual processing. The dorsal stream, projecting towards the parietal cortex, is involved in processing spatial location and motion, while the ventral stream, projecting towards the temporal cortex, is involved in object recognition and form analysis.

• The integration of information in V2 contributes to the segregation of these two streams, each of which processes different aspects of the visual scene.

Unusual Features and Connectivity

• V2 exhibits complex intrinsic connectivity, with extensive horizontal connections within the cortex that facilitate the integration of information across different functional zones.

• V2 also receives feedback inputs from higher-order visual areas, indicating its involvement in top-down processing and the influence of cognitive factors such as attention and expectation on visual perception.

V3

The V3, or Tertiary Visual Cortex, is an important region in the brain's visual processing network. Located in the occipital lobe and adjacent to the primary (V1) and secondary (V2) visual cortices, V3 plays a significant role in the interpretation and integration of visual information. This area is distinguished by its unique neuron types, functional properties, and contributions to specific aspects of visual perception.

Structural and Functional Organization

• V3 is part of the larger visual processing system and maintains a retinotopic organization, similar to V1 and V2, which helps in mapping the visual field spatially onto the cortical surface.

• The functional role of V3 is diverse, and it is believed to contribute to both the dorsal and ventral streams of visual processing. The dorsal stream, associated with spatial awareness and motion processing, and the ventral stream, associated with object recognition and form processing, both receive inputs from V3.

Processing in V3

• V3 is involved in the processing of complex visual stimuli, including the perception of motion, depth, and form. It plays a role in integrating visual information from V1 and V2 and further elaborating on this information.

• V3 neurons contribute to the understanding of global motion patterns and the perception of the speed and direction of moving objects. This is crucial for navigating dynamic environments and interacting with moving objects.

Neuron Types and Functional Properties

• Neurons in V3 exhibit diverse response properties, with some neurons being particularly sensitive to specific orientations, spatial frequencies, and motion directions. These neurons help in detecting and interpreting complex visual patterns and motion.

• V3 contains neurons that are involved in the processing of binocular disparity, contributing to stereoscopic depth perception. This feature allows for the perception of three-dimensional depth and structure in the visual scene.

Role in Visual Pathways

• V3 serves as an important node in the visual processing pathways, linking the initial processing in V1 and V2 with more complex interpretation in higher-order visual areas.

• The integration of information in V3 is critical for the segregation and specialization of the dorsal and ventral streams, each processing different aspects of the visual scene.

Unusual Features and Connectivity

• V3 exhibits complex intrinsic connectivity, with extensive horizontal connections within the cortex. These connections facilitate the integration of information across different areas of V3, contributing to the holistic processing of visual stimuli.

• V3 also receives feedback inputs from higher-order visual areas, indicating its involvement in top-down processing. This suggests that cognitive factors such as attention, expectation, and prior experience can influence visual perception at the level of V3.

V4

V4 is a significant area within the visual cortex, playing a crucial role in the processing of visual information, particularly in the aspects of color and form perception. Situated along the ventral stream of the visual pathway, which is involved in object recognition and detailed visual analysis, V4 exhibits unique neuron types and functional properties that contribute to its specialized role in visual perception.

Functional Specialization

• V4 is primarily involved in processing color and form, including complex geometric patterns and object features. This specialization is essential for the perception of a detailed and colorful visual world.

• The area is also implicated in the processing of spatial attention and the integration of visual information with other sensory modalities, contributing to the creation of a coherent sensory experience.

Neuron Types and Properties

• Neurons in V4 exhibit diverse response characteristics, with many being highly sensitive to specific aspects of visual stimuli. This includes sensitivity to color, shape, orientation, and spatial frequency.

• Color processing in V4 is particularly notable. Neurons here are involved in color constancy, the ability to perceive consistent colors under varying lighting conditions. These neurons can integrate information about the spectral properties of light and the context of the visual scene to maintain stable color perception.

• Additionally, V4 neurons contribute to the perception of complex shapes and patterns, responding to a range of geometric forms and visual textures.
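The color-constancy problem described above can be illustrated with the "gray-world" algorithm from computer vision, which assumes the average surface in a scene is neutral and rescales each color channel accordingly (a von Kries-style per-channel gain). The Python sketch below is an analogy for the computational problem only, not a model of V4; the scene values and the illuminant are made-up assumptions.

```python
# Gray-world color constancy sketch (computer-vision analogy, not a model of V4).


def gray_world(pixels: list[tuple[float, float, float]], target: float = 0.5):
    """Rescale each RGB channel so its mean equals `target`.

    pixels: (r, g, b) tuples whose channel means are positive.
    """
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gains = [target / m for m in means]  # one gain per channel (von Kries-style)
    return [tuple(p[c] * gains[c] for c in range(3)) for p in pixels]


if __name__ == "__main__":
    scene = [(0.2, 0.4, 0.6), (0.8, 0.5, 0.1), (0.3, 0.9, 0.4)]  # made-up reflectances
    illuminant = (1.3, 1.0, 0.7)  # hypothetical warm light source
    lit = [tuple(p[c] * illuminant[c] for c in range(3)) for p in scene]
    print(gray_world(scene))
    print(gray_world(lit))  # same values up to rounding: the illuminant cast is removed
```

Because a uniform illuminant multiplies every pixel in a channel by the same factor, dividing by the channel mean cancels it exactly; real scenes violate the gray-world assumption, which is one reason biological color constancy also relies on wider scene context.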

Role in Visual Processing Pathways

• V4 serves as an intermediate processing stage in the ventral stream, receiving inputs from lower-level visual areas like V2 and V3 and sending outputs to higher-order areas involved in object recognition and memory, such as the inferior temporal cortex.

• The integration of visual information in V4 is critical for the recognition and interpretation of objects and scenes, bridging the gap between basic visual features and complex perceptual constructs.

Unusual Features and Connectivity

• V4 exhibits a high degree of intra-areal connectivity, with extensive horizontal connections that facilitate the integration of information across different parts of the visual field. This connectivity is important for the holistic perception of objects and scenes.

• V4 also receives feedback inputs from higher-order visual and cognitive areas, suggesting that perception in V4 is influenced by factors such as attention, expectation, and experience.

• V4 offers valuable insights into the neural mechanisms underlying color perception, object recognition, and the integration of visual information, and it has important implications for understanding visual perception, cognition, and disorders affecting vision.

V5/MT

V5/MT, also known as the Middle Temporal visual area, is a crucial region in the visual cortex that specializes in the processing of motion. Located along the dorsal stream of the visual processing pathway, V5/MT plays a pivotal role in perceiving and interpreting motion, an essential aspect of visual cognition and navigation. The unique neuron types and functional properties of V5/MT underscore its significance in the neural network responsible for visual perception.

Functional Specialization

• V5/MT is primarily dedicated to the detection and analysis of motion within the visual field. It processes information about the direction, speed, and coherence of moving objects, contributing to the perception of fluid motion and dynamic scenes.

• The area is also involved in the perception of motion in depth, which is crucial for navigating and interacting with a three-dimensional environment.

Neuron Types and Properties

• Neurons in V5/MT exhibit specialized response characteristics, with many being selectively sensitive to specific directions and speeds of motion. This selectivity allows V5/MT to encode detailed information about the dynamics of moving objects.

• V5/MT contains direction-selective neurons, which respond preferentially to movement in particular directions. This feature is critical for discerning the trajectory and orientation of moving objects.

• Neurons in V5/MT also contribute to the processing of motion coherence, the ability to detect uniform motion patterns within a field of random motion, which is important for distinguishing moving objects from their backgrounds.
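Direction selectivity can be illustrated with the Hassenstein-Reichardt correlator, which multiplies a delayed signal from one receptor with the current signal from its neighbor and subtracts the mirror-image product. This model was proposed for insect vision, and motion-energy models are more commonly used for primate MT; it is used here only as the simplest frame-based illustration. The function names and the one-pixel "bar" stimulus are hypothetical.

```python
# Opponent Hassenstein-Reichardt correlator on a sequence of 1D frames
# (illustrative model of direction selectivity, not a model of MT itself).


def moving_bar(n_frames: int, width: int, start: int, velocity: int) -> list[list[float]]:
    """Frames of a 1D 'retina' with one bright pixel moving `velocity` pixels per frame."""
    frames = []
    for t in range(n_frames):
        frame = [0.0] * width
        frame[(start + velocity * t) % width] = 1.0
        frames.append(frame)
    return frames


def reichardt(frames: list[list[float]], x: int, spacing: int = 1, delay: int = 1) -> float:
    """Summed opponent output for receptors at x and x + spacing.

    Positive = rightward motion, negative = leftward, zero = no net motion.
    """
    total = 0.0
    for t in range(delay, len(frames)):
        a_now, b_now = frames[t][x], frames[t][x + spacing]
        a_past, b_past = frames[t - delay][x], frames[t - delay][x + spacing]
        total += a_past * b_now - b_past * a_now  # rightward half minus leftward half
    return total


if __name__ == "__main__":
    width = 20
    for label, start, v in (("rightward", 0, 1), ("leftward", 19, -1), ("static", 5, 0)):
        frames = moving_bar(20, width, start, v)
        population = sum(reichardt(frames, x) for x in range(width - 1))
        print(label, population)
```

Because the delay is counted in frames, the detector's preferred speed is tied to the frame rate (here one pixel per frame), which is the kind of frame-locked temporal dependence this paper is concerned with.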

Role in Visual Processing Pathways

• V5/MT is a key component of the dorsal stream, which is associated with the processing of spatial location and movement. It receives inputs from earlier visual areas, including V1 and V2, and integrates this information to construct a comprehensive representation of motion.

• The output from V5/MT is sent to higher-order areas involved in motion analysis and visually guided actions, such as the posterior parietal cortex.

Unusual Features and Connectivity

• V5/MT exhibits a high degree of intra-areal connectivity, with extensive horizontal connections that enable the integration of motion information across different regions of the visual field. This connectivity is essential for the holistic perception of motion and the coordination of visually guided actions.

• V5/MT is also characterized by its strong interconnections with other areas of the dorsal stream, as well as feedback connections from higher-order cognitive and motor regions. These connections suggest that the processing of motion in V5/MT is influenced by a range of factors, including attention, prediction, and behavioral context.

Involvement in Visual Illusions and Disorders

• V5/MT is noteworthy for its involvement in certain visual illusions related to motion, such as the perception of movement in static images. These illusions highlight the complex neural mechanisms underlying motion perception.

• Additionally, abnormalities in V5/MT have been implicated in motion perception disorders, providing insights into conditions like akinetopsia, where individuals have difficulty perceiving motion.

• V5/MT offers valuable insights into the neural mechanisms underlying motion perception and has important implications for understanding visual perception, cognition, and disorders affecting motion processing in the visual system.

These higher visual areas are interconnected and also communicate with other parts of the brain, such as the parietal and temporal lobes, for integrating visual information with memory, spatial awareness, and other sensory modalities.

Visual processing involves a highly integrated and complex network of neurons and brain regions, each contributing to the creation of a coherent and detailed visual perception. This process exemplifies the remarkable capabilities of the nervous system in processing and interpreting sensory information.

Structure of Neurons

A "typical" neuron, the fundamental building block of the nervous system, is characterized by four distinct regions, each playing a crucial role in the neuron's function. Understanding the structure and function of these regions is key to comprehending how neurons communicate and process information.

The first region is the cell body, also known as the soma. The cell body is the metabolic and organizational hub of the neuron, responsible for maintaining the cell's health and functionality. It houses the nucleus, which contains the cell's genetic material and controls protein synthesis. The cell body also functions as the neuron's manufacturing and recycling plant, where essential neuronal proteins and other components are synthesized and processed. This includes the production of neurotransmitters, enzymes, and structural proteins necessary for the neuron's operation.

The second and third regions of a neuron are its processes, which are structures that extend away from the cell body. These processes serve as conduits for signals to flow to or away from the cell body. The incoming signals from other neurons are typically received through the neuron's dendrites. Dendrites are branched, tree-like structures that provide a large surface area for receiving synaptic inputs from other neurons. Each neuron may have numerous dendrites, each covered in synaptic sites where neurotransmitters from other neurons can bind and influence the neuron's activity. The dendrites play a critical role in integrating these incoming signals and transmitting them to the cell body.

The outgoing signal to other neurons flows along the neuron's axon. A neuron typically has only one axon, but this axon can branch extensively to communicate with multiple target neurons. The axon is a long, slender projection that conducts electrical impulses, known as action potentials, away from the cell body. In many neurons the axon is wrapped in myelin, which enables rapid (saltatory) conduction of these signals over long distances.

The fourth distinct region of a neuron is found at the end of the axon: the axon terminals, also known as terminal boutons. These structures contain synaptic vesicles filled with neurotransmitters, the chemical messengers used for communication between neurons. When an action potential reaches the axon terminals, it triggers the release of neurotransmitters into the synaptic cleft, the small gap between neurons. The neurotransmitters then bind to receptor sites on the dendrites or cell bodies of the receiving neuron, facilitating the transmission of signals across the synapse.

Neurotransmitters play a crucial role in neuronal communication, allowing signals to flow from one neuron to the next at chemical synapses. The type and amount of neurotransmitter released, along with the specific receptors on the receiving neuron, determine the nature of the signal transmitted — whether it is excitatory or inhibitory, for instance. This chemical communication is fundamental to the functioning of neural networks and underlies all neural processes, from basic reflexes to complex cognitive tasks.
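The interplay of excitatory and inhibitory synapses can be sketched with a leaky integrate-and-fire (LIF) neuron, a standard simplification in which the membrane potential leaks toward rest, jumps with each weighted input spike, and resets after crossing threshold. In the Python sketch below, positive weights stand for excitatory synapses and negative weights for inhibitory ones; the parameter values and the function name are illustrative assumptions, and synaptic currents are treated as instantaneous kicks.

```python
# Discrete-time leaky integrate-and-fire sketch (illustrative parameters, instantaneous synapses).


def lif_spike_count(inputs, weights, tau=20.0, v_rest=0.0, v_thresh=1.0, dt=1.0):
    """Count output spikes of a LIF neuron.

    inputs:  sequence of time steps; each step lists presynaptic spikes (0 or 1).
    weights: one weight per synapse; positive = excitatory, negative = inhibitory.
    """
    v, spikes = v_rest, 0
    for step in inputs:
        drive = sum(w * s for w, s in zip(weights, step))
        v += dt * (v_rest - v) / tau + drive  # leak toward rest, then add synaptic input
        if v >= v_thresh:
            spikes += 1
            v = v_rest  # reset after an action potential
    return spikes


if __name__ == "__main__":
    both_active = [[1, 1]] * 100  # two presynaptic neurons firing on every step
    print("excitation only:   ", lif_spike_count(both_active, [0.2, 0.0]))
    print("with inhibition:   ", lif_spike_count(both_active, [0.2, -0.1]))
    print("balanced inhibition:", lif_spike_count(both_active, [0.2, -0.2]))
```

Adding an inhibitory synapse lowers the firing rate, and fully balanced inhibition silences the neuron, which is the excitatory/inhibitory distinction described above in its simplest form.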

Types of Neurons

Neurons are remarkably diverse. This diversity is reflected in their morphology, electrophysiological properties, and the roles they play within neural circuits. The different types of neurons have distinct characteristics that affect their individual behavior and contributions to overall neural function. Here, we explore some major types of neurons and how their unique features influence their behavior.

Sensory Neurons

• Sensory neurons, also known as afferent neurons, are responsible for converting external stimuli from the environment into internal electrical impulses. These neurons are typically found in sensory organs such as the eyes, ears, skin, and nose.

• The unique feature of sensory neurons is their ability to respond to specific types of sensory inputs, such as light, sound, touch, or chemical signals. Their behavior is characterized by the translation of these stimuli into nerve signals that are relayed to the brain or spinal cord for processing.

Motor Neurons

• Motor neurons, or efferent neurons, carry signals from the central nervous system to muscles and glands, facilitating movement and action. They are key players in the motor system and are involved in both voluntary and involuntary actions.

• The behavior of motor neurons is marked by their ability to stimulate muscle contraction or gland secretion. They receive signals from the brain or spinal cord and translate them into specific actions, such as muscle movement or glandular responses.

Interneurons

• Interneurons are neurons that are located entirely within the central nervous system and serve as connectors between other neurons. They are the most numerous type of neuron and play critical roles in information processing and signal integration.

• The behavior of interneurons is diverse, as they are involved in various functions such as reflexes, neural oscillations, and complex cognitive processes. Their ability to form extensive networks allows them to integrate signals from multiple sources, modulate neural circuits, and influence the output of motor neurons.

Pyramidal Neurons

Pyramidal neurons are a type of excitatory neuron found in the cerebral cortex and hippocampus. They are characterized by a pyramid-shaped cell body and a single long axon. The behavior of pyramidal neurons is crucial for cognitive functions such as learning, memory, and decision-making. They transmit signals within the cortex and between the cortex and other brain regions. Their extensive dendritic trees allow them to receive and integrate numerous inputs from other neurons. The double pyramidal cell, a specific type of neuron found in the cerebral cortex, exhibits unique structural and functional characteristics. Because little published information addresses double pyramidal cells specifically, the discussion below draws on the general understanding of pyramidal cells in the cerebral cortex.

Structure and Characteristics of Pyramidal Cells

Morphology

• Pyramidal cells are the most abundant type of neuron in the cerebral cortex.

• They are characterized by a pyramid-shaped cell body, a large apical dendrite extending towards the cortical surface, multiple basal dendrites, and an axon that projects to various cortical and subcortical areas.

Dendritic Structure

• The dendrites are covered in spines, which are sites of synaptic input.

• The apical dendrite typically branches as it ascends and may have additional oblique branches.

• The complexity of dendritic arborization varies among pyramidal cells in different cortical areas and among species, reflecting their functional diversity.

Axonal Projections

• Axons can extend over long distances, facilitating communication between different brain regions.

• Pyramidal cells in different cortical layers have distinct projection patterns. For example, cells in layer V often project to subcortical structures, while those in layer III might project to other cortical areas.

Electrophysiological Properties

• Pyramidal cells exhibit diverse firing patterns, including regular spiking and bursting, which are crucial for information processing and transmission in neural circuits.

• They typically exhibit excitatory neurotransmission, primarily using glutamate as a neurotransmitter.
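Regular spiking and bursting can be reproduced qualitatively with the Izhikevich (2003) two-variable model, which changes firing pattern by changing four parameters (a, b, c, d). The Python sketch below uses the parameter sets Izhikevich reported for regular-spiking and chattering (fast rhythmic bursting) cortical neurons; the constant input current, simulation length, and Euler step size are assumptions chosen for illustration.

```python
# Izhikevich (2003) spiking-neuron sketch comparing regular spiking and bursting (chattering).
# Input current, duration and Euler step are illustrative assumptions.

PARAMS = {  # (a, b, c, d)
    "regular_spiking": (0.02, 0.2, -65.0, 8.0),
    "chattering": (0.02, 0.2, -50.0, 2.0),
}


def izhikevich_spikes(a, b, c, d, current=10.0, t_max=500.0, dt=0.25):
    """Return spike times (ms) for a constant input current, using forward Euler."""
    v = -65.0       # membrane potential (mV)
    u = b * v       # recovery variable
    spikes = []
    for i in range(int(t_max / dt)):
        if v >= 30.0:                 # spike peak reached: record and reset
            spikes.append(i * dt)
            v, u = c, u + d
        v += dt * (0.04 * v * v + 5.0 * v + 140.0 - u + current)
        u += dt * a * (b * v - u)
    return spikes


def min_isi(spike_times):
    """Shortest inter-spike interval (ms); needs at least two spikes."""
    return min(t2 - t1 for t1, t2 in zip(spike_times, spike_times[1:]))


if __name__ == "__main__":
    for name, p in PARAMS.items():
        times = izhikevich_spikes(*p)
        print(f"{name}: {len(times)} spikes, shortest ISI {min_isi(times):.2f} ms")
```

The regular-spiking cell fires isolated spikes with growing intervals (adaptation), while the chattering cell fires tight bursts separated by pauses, so its shortest inter-spike interval is much smaller.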

Functional Role

• Pyramidal neurons play a key role in cognitive functions like sensory perception, motor control, and complex thought processes.

• They are involved in synaptic plasticity mechanisms, which are fundamental for learning and memory.

Variations Among Species

Comparative studies show that pyramidal cells vary markedly in structure among different species and cortical regions, suggesting adaptations to specific cognitive functions.

Implications of Structural and Functional Diversity

The diversity in the structure and function of pyramidal cells, including those with atypical features like double pyramidal cells, underscores the complexity of neural processing in the cerebral cortex. This diversity allows for a range of computational capabilities and adaptive responses to environmental inputs. Understanding these variations can provide insights into the cellular basis of cognitive functions and the pathophysiology of neurological disorders.

The literature provides a detailed view of pyramidal neurons' characteristics, but precise characterization of "double pyramidal cells" as a distinct subtype will require further research.

The unusual characteristics and firing patterns attributed to double pyramidal cells are examined in more detail below.

Unusual Characteristics

Dendritic Orientation: One of the most unusual characteristics of double pyramidal cells is their dendritic structure, with two opposing dendritic tufts. This contrasts with typical pyramidal neurons that have a single apical dendrite extending towards the cortical surface. The bipolar dendritic orientation of double pyramidal cells allows them to potentially integrate information from different cortical layers or areas.

Axonal Projections: The axons of double pyramidal cells may have distinct projection patterns compared to other pyramidal neurons. They might innervate local as well as distant cortical regions, contributing to both local circuitry and long-range cortical connections.

Synaptic Specificity: These cells might show specific patterns of synaptic connectivity, receiving inputs from distinct types of neurons or specific cortical layers, which could influence how they process and relay information.

Molecular and Genetic Markers: There may be unique molecular or genetic markers that distinguish double pyramidal cells from other neuron types, which can be important for understanding their development, function, and involvement in diseases.

Firing Patterns

Response to Stimulation: The firing patterns of double pyramidal cells in response to synaptic stimulation can provide insights into their functional role. These cells might exhibit distinct responses to excitatory and inhibitory inputs compared to other pyramidal neurons.

Spontaneous Activity: The spontaneous firing rate and pattern of these neurons, observed in the absence of external stimuli, can indicate their baseline activity and role in maintaining cortical network dynamics.

Adaptation and Plasticity: The ability of double pyramidal cells to adapt their firing patterns in response to changes in synaptic input or environmental conditions is crucial for understanding their role in learning and memory processes.

Pathological Conditions: Alterations in the firing patterns of double pyramidal cells can be associated with various neurological conditions. For instance, changes in their excitability or responsiveness could be linked to epilepsy, autism, or other cortical dysfunctions.

Comparative Analysis: Comparing the firing patterns of double pyramidal cells with those of other cortical neurons can reveal how these unique cells contribute to the overall function of the cerebral cortex.

Studying the unusual characteristics and firing patterns of double pyramidal cells is key to understanding their contribution to cortical processing and functionality. These aspects are particularly relevant in the context of sensory integration, cognitive processing, and the potential involvement of these cells in neurological disorders. As research progresses, it is likely that more detailed insights into the unique properties of double pyramidal cells will emerge, enhancing our understanding of cortical neurobiology.

Purkinje Neurons

• Purkinje neurons are found in the cerebellum and are among the largest neurons in the brain. They have an elaborate dendritic arbor that forms a flat, fan-like structure.

• The behavior of Purkinje neurons is central to motor coordination and balance. They receive inputs from various sources, including sensory and motor systems, and play a key role in refining motor movements. The distinctive architecture of their dendritic trees enables them to process a vast amount of information and modulate the activity of the cerebellar circuits.

Inhibitory Neurons

• Inhibitory neurons, such as basket cells and chandelier cells, primarily use neurotransmitters like gamma-aminobutyric acid (GABA) to inhibit the activity of other neurons. They are essential for regulating the excitability of neural circuits.

• The behavior of inhibitory neurons is characterized by their ability to control and balance the excitation within neural networks. They prevent excessive neuronal firing and maintain the stability of neural circuits, which is crucial for preventing disorders like epilepsy.

Sensory neurons specialize in translating external stimuli into neural signals, while motor neurons are involved in eliciting responses and actions. Interneurons, with their extensive connectivity, integrate and modulate signals within neural circuits. Pyramidal neurons, primarily found in the cerebral cortex, play key roles in higher cognitive functions. Purkinje neurons, with their elaborate dendritic trees, are central to motor coordination in the cerebellum. Inhibitory neurons, such as basket and chandelier cells, regulate neural excitability and maintain the balance of excitation and inhibition in the brain.

Each type of neuron contributes uniquely to the overall functioning of the nervous system, and their individual behaviors are shaped by their specific structural and functional attributes. Understanding the diverse types of neurons and their distinct roles is essential for unraveling the complexities of neural circuits and for advancing our knowledge of brain function and neural disorders. The intricate interplay among different neuron types facilitates the remarkable capabilities of the brain, from basic sensory processing to complex cognitive tasks.

Neural Response

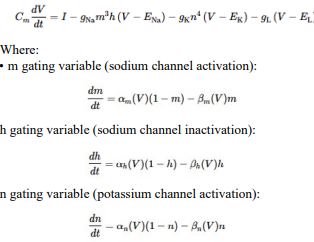

In response to a voltage input, a biological neuron goes through a series of processes that include spike production (action potential generation), decay of sensitivity with repeated firings (accommodation or adaptation), and changes in the threshold. Here's a detailed description of each step:

Response to Voltage Input: When a voltage input, such as synaptic input or an externally applied voltage change, depolarizes a neuron's membrane, the membrane potential moves closer to the firing threshold.

Spike Production (Action Potential Generation): If the depolarization is strong enough to reach the neuron's firing threshold, voltage-gated sodium (Na⁺) channels open, causing a rapid influx of Na⁺ ions. This influx further depolarizes the membrane, leading to the rapid rising phase of the action potential. After reaching a peak, the membrane begins to repolarize as voltage-gated potassium (K⁺) channels open, allowing K⁺ ions to exit the neuron. This repolarization returns the membrane potential towards the resting level. The neuron may briefly hyperpolarize (undershoot the resting potential) before returning to its resting state, at which point it is ready to respond to new inputs.

Decay of Sensitivity with Repeated Firings (Adaptation): With repeated or sustained stimulation, a neuron can exhibit a decrease in responsiveness, known as accommodation or adaptation. This is often due to the activation of various ion channels that counteract depolarization or to the inactivation of Na⁺ channels. This process makes it harder for the neuron to reach the firing threshold with subsequent stimuli, effectively raising the threshold or temporarily reducing the neuron's excitability.

Threshold Changes: The firing threshold of a neuron is not static and can change based on the neuron's recent activity and the balance of excitatory and inhibitory inputs it receives. After repetitive firing, certain ion channels (like calcium-activated potassium channels) may be activated, which can hyperpolarize the membrane or stabilize it, making it harder to depolarize to the threshold. Conversely, certain neuromodulators or synaptic inputs can lower the firing threshold, making the neuron more likely to fire in response to subsequent inputs.
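The three processes above (spike production, adaptation, and threshold change) can be illustrated together with a toy discrete-time model. The sketch below is a minimal leaky integrate-and-fire neuron with an adaptive threshold, assuming that each spike raises the threshold by a fixed amount that then relaxes back toward a resting value. The class name, its parameters, and their defaults are hypothetical and are not the neuron model proposed in this paper; the reset to zero stands in for repolarization, and the underlying ion-channel mechanics are abstracted away.

```python
from typing import List


class AdaptiveThresholdNeuron:
    """Discrete-time leaky integrate-and-fire neuron with an adaptive threshold.

    Hypothetical illustration: parameter names and default values are
    assumptions chosen for readability, not values taken from this paper.
    """

    def __init__(
        self,
        leak: float = 0.1,             # fraction of the potential lost per step
        base_threshold: float = 1.0,   # resting firing threshold
        threshold_jump: float = 0.5,   # threshold increase after each spike (adaptation)
        threshold_decay: float = 0.2,  # fraction of the excess threshold removed per step
    ) -> None:
        self.leak = leak
        self.base_threshold = base_threshold
        self.threshold_jump = threshold_jump
        self.threshold_decay = threshold_decay
        self.v = 0.0                     # membrane potential
        self.threshold = base_threshold  # current (adaptive) threshold

    def step(self, current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        # Depolarization: integrate the input while the leak pulls v toward rest.
        self.v += current - self.leak * self.v
        # Recovery: a raised threshold relaxes back toward its resting value.
        self.threshold -= self.threshold_decay * (self.threshold - self.base_threshold)
        if self.v >= self.threshold:
            # Spike, then reset (a stand-in for repolarization).
            self.v = 0.0
            # Adaptation: each spike makes the next one harder to reach.
            self.threshold += self.threshold_jump
            return True
        return False


def run(neuron: AdaptiveThresholdNeuron, inputs: List[float]) -> List[int]:
    """Return a 0/1 spike train for a sequence of input values."""
    return [int(neuron.step(x)) for x in inputs]


adaptive = run(AdaptiveThresholdNeuron(), [0.6] * 20)
fixed = run(AdaptiveThresholdNeuron(threshold_jump=0.0), [0.6] * 20)
print(sum(fixed), sum(adaptive))  # 10 7
```

With a constant input of 0.6 for 20 steps, the copy with `threshold_jump=0.0` spikes on every second step (10 spikes), whereas the adaptive neuron spikes on step 2 and then only every third step (7 spikes): the same stimulus produces a lower firing rate once adaptation raises the threshold.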

Global Mechanisms

Global mechanisms for neural control are crucial in regulating and coordinating neural activity across various regions of the brain and nervous system. These overarching processes and systems play an essential role in maintaining the balance and functionality of neural circuits, ensuring that the nervous system operates in a coordinated and adaptive manner. Several key global mechanisms work in concert to achieve this regulation:

Neuromodulation is a significant global mechanism that involves the release of neuromodulators, chemicals that can modify the activity of neurons and neural circuits across the brain. Unlike fast point-to-point synaptic transmission, neuromodulators such as dopamine, serotonin, acetylcholine, and norepinephrine often have widespread effects, impacting multiple brain regions and neural pathways simultaneously. This allows neuromodulators to play a crucial role in modulating a range of brain functions, including mood, arousal, attention, and motivation.

Hormonal regulation, mediated by the endocrine system, is another global mechanism that exerts a broad influence on brain function and behavior. Hormones like cortisol, estrogen, testosterone, and thyroid hormones can affect a wide array of neural processes, from brain development and neural plasticity to mood regulation and stress responses. These hormones are released into the bloodstream and can affect neurons and neural circuits throughout the brain and body, providing a systemic means of neural regulation.

The reticular activating system (RAS), located in the brainstem, is essential in regulating wakefulness and alertness. The RAS sends projections to various parts of the brain, including the thalamus and cortex, influencing the overall level of consciousness and attention. It acts as a gatekeeper for sensory information, filtering incoming stimuli, and prioritizing those that require attention. By maintaining an optimal level of arousal and responsiveness, the RAS ensures that individuals remain attentive and responsive to their environment.

The autonomic nervous system (ANS), comprising the sympathetic and parasympathetic divisions, is a key player in global neural control. The ANS regulates involuntary bodily functions such as heart rate, blood pressure, digestion, and respiratory rate. It operates globally to maintain homeostasis and to respond appropriately to stressors, modulating the activity of various organs and systems in response to internal and external stimuli.

Circadian rhythms, the 24-hour cycles that regulate physiological and behavioral processes, including sleep-wake cycles, hormone release and metabolism, also constitute a global control mechanism. The suprachiasmatic nucleus (SCN) in the hypothalamus acts as the body's master circadian clock, coordinating these rhythms across different tissues and organs. This regulation ensures that internal processes are synchronized with external environmental cues, such as light and darkness.

Additionally, higher brain regions, particularly the prefrontal cortex, exert top-down control over other parts of the brain. This control allows for the regulation of complex cognitive processes, emotional responses, and goal-directed behaviors. Through top-down mechanisms, the brain can prioritize and modulate sensory information, influence decision-making processes, and regulate emotional and behavioral responses based on the context and individual goals.

Global mechanisms for neural control encompass a range of processes and systems that collectively ensure the coordinated and adaptive functioning of the nervous system. These mechanisms include neuromodulation, hormonal regulation, the reticular activating system, the autonomic nervous system, circadian rhythms, and top-down control from higher brain regions. Together, they maintain homeostasis, regulate physiological and psychological processes, and enable complex behaviors and cognitive functions. These global systems highlight the remarkable ability of the nervous system to integrate and respond to a vast array of stimuli.

Prior Work in Video Processing with Spike Neurons

The use of Liquid State Machines (LSMs) in video processing has been explored in various studies, focusing on different aspects such as movement prediction, real-time processing, and action recognition. Here are some key papers on this topic:

• Movement Prediction from Real-World Images: Introduced an approach using LSM combined with supervised learning algorithms to predict object trajectories, specifically ball trajectories, in robotics based on video data from a camera mounted on a robot.

• Real-Time Processing and Spatio-Temporal Data: Discussed a digital neuromorphic design of LSM for real-time processing of spatiotemporal data, focusing on its potential in tasks dependent on a system's behavior over time. This work highlights the capability of LSMs in handling complex data streams, such as those found in video processing.

• Parallelized LSM for Action Detection in Videos: Presented a novel Parallelized LSM (PLSM) architecture for classifying unintentional actions in video clips. This study demonstrated the effectiveness of PLSM, which outperformed both self-supervised models and traditional deep learning models in video processing tasks.

• Deep Liquid State Machine (D-LSM) for Computer Vision: Proposed the Deep Liquid State Machine (D-LSM), which integrates the capabilities of recurrent spiking networks with deep architectures. This model aims to enhance the extraction of spatio-temporal features from input, relevant to video processing applications.

These studies showcase the diverse applications and advancements of LSMs in the field of video processing, highlighting their potential in predicting movements, processing real-time data, and detecting actions in video streams.

Spike Modulated Artificial Neuron

Membrane Potential

The neuron generates spikes in response to an incoming voltage. In biological terms, this voltage corresponds to the membrane potential: the electrical potential difference across the neuronal membrane, which results from the distribution of ions (such as sodium, potassium, chloride, and calcium) between the inside and outside of the neuron. From our biomimetic perspective, it is treated as a continuous value.

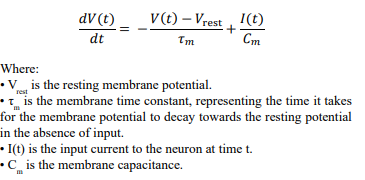

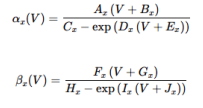

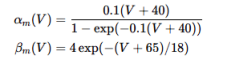

The membrane potential is updated at each discrete time step as follows:

$$V(t) = \max\bigl(0,\; V(t-1) + I(t) - \delta \cdot V(t-1)\bigr)$$

Where:

• V(t) is the membrane potential at time t.

• V(t − 1) is the membrane potential from the previous time step.

• I(t) is the voltage input at time t.

• δ is the membrane decay rate.
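The update rule can be checked numerically by iterating it directly. This is a minimal sketch of the equation as written, assuming an initial potential of zero unless `v0` is given; the function name `membrane_trace` and the `v0` parameter are hypothetical. The equation contains no spike or reset term, so the sketch computes only the potential trace.

```python
from typing import Iterable, List


def membrane_trace(inputs: Iterable[float], delta: float, v0: float = 0.0) -> List[float]:
    """Iterate V(t) = max(0, V(t-1) + I(t) - delta * V(t-1)).

    `delta` is the membrane decay rate. For delta in (0, 1] and zero input,
    the potential shrinks by a factor of (1 - delta) per step.
    """
    v = v0
    trace = []
    for i_t in inputs:
        # Add this step's input, subtract the decay term, and clamp at zero.
        v = max(0.0, v + i_t - delta * v)
        trace.append(v)
    return trace


print(membrane_trace([1.0, 1.0, 0.0, -2.0], delta=0.5))
# [1.0, 1.5, 0.75, 0.0]
```

In this example the third step shows pure decay (1.5 drops by δ · 1.5 = 0.75), and the negative input in the fourth step is absorbed by the max(0, ·) clamp, so the modeled potential never becomes negative.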

Spiking versus Graded Potentials

In the visual pathway, different types of cells use distinct mechanisms for signaling, with some utilizing action potentials or "spikes," and others relying on graded potentials. Here's an overview of how various cells in the visual pathway use these signaling mechanisms:

Cells Using Action Potentials (Spikes)

• Retinal Ganglion Cells (RGCs): RGCs use action potentials to transmit visual information from the retina to the brain. The spikes generated by RGCs carry the processed visual information along the optic nerve to the lateral geniculate nucleus (LGN), which relays it to the visual cortex.

• Neurons in the Lateral Geniculate Nucleus (LGN): Neurons in the LGN use action potentials to relay visual information from the optic nerve to the visual cortex.

• Neurons in the Visual Cortex (V1, V2, V3, V4, V5/MT, etc.): Neurons in the various areas of the visual cortex, including the primary visual cortex (V1) and higher visual areas, use action potentials to process and transmit visual information within the cortex and to other brain regions.

Cells Using Graded Potentials

• Photoreceptors (Rods and Cones): Photoreceptors use graded potentials to respond to light. When light strikes them, they hyperpolarize, which reduces their release of neurotransmitter (glutamate) onto bipolar cells. Photoreceptors do not generate action potentials.

• Bipolar Cells: Bipolar cells in the retina also use graded potentials to transmit signals from photoreceptors to retinal ganglion cells. The amount of neurotransmitter released by bipolar cells scales with the size of their membrane potential change.

• Horizontal Cells: Horizontal cells use graded potentials to modulate the input from photoreceptors to bipolar cells, contributing to lateral inhibition and contrast enhancement in the retina.

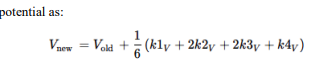

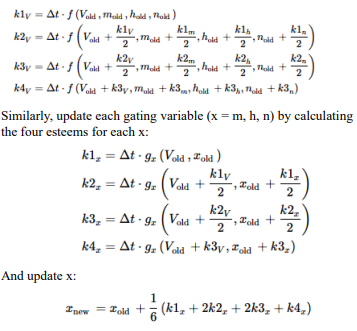

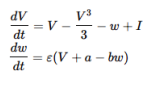

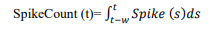

• Amacrine Cells: Amacrine cells use graded potentials to influence the activity of bipolar cells and retinal ganglion cells. They play a role in various aspects of visual processing, such as motion detection, adaptation, and temporal integration.
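The distinction between the two signaling modes can be made concrete with a toy comparison. This is a minimal sketch, assuming a graded cell whose output is simply proportional to its input and a spiking cell modeled as a non-leaky integrate-and-fire unit whose spikes are counted per time window (for example, one video frame). The function names, the gain, the threshold, and the window length are hypothetical choices for illustration and do not describe the neuron proposed in this paper.

```python
from typing import List


def graded_response(stimulus: List[float], gain: float = 1.0) -> List[float]:
    """Graded potential: the output tracks input amplitude continuously."""
    return [gain * s for s in stimulus]


def spike_counts(stimulus: List[float], threshold: float = 1.0,
                 steps_per_window: int = 8) -> List[int]:
    """Spiking: integrate input and emit all-or-none spikes, counted per window.

    Each stimulus value is held for `steps_per_window` sub-steps. On each
    sub-step, if the integrated input reaches the threshold, one spike is
    emitted and the threshold is subtracted (reset by subtraction), so a
    stronger input yields more spikes rather than a larger spike.
    """
    counts: List[int] = []
    v = 0.0
    for s in stimulus:
        n = 0
        for _ in range(steps_per_window):
            v += s
            if v >= threshold:
                v -= threshold
                n += 1
        counts.append(n)
    return counts


stimulus = [0.125, 0.25, 0.5]
print(graded_response(stimulus))  # [0.125, 0.25, 0.5]
print(spike_counts(stimulus))     # [1, 2, 4]
```

The graded cell passes amplitude through as a continuous value, while the spiking cell quantizes the same amplitudes into integer spike counts per window; intensity is carried by how many identical spikes occur, not by their size.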